Do you need to automate error reporting based on recent events, but don’t want to use third-party tools? This article describes how to collect events from different sources and combine them in one document using only standard Windows tools.

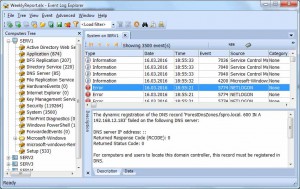

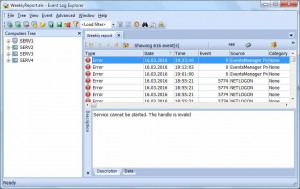

Recently I described how to export events into Excel format using our Event Log Explorer software. However, in some cases third-party software cannot be used: your company may not have the budget for event log utilities, or its rules may restrict them. Either way, regularly exporting recent events from different machines into one legible file remains a crucial task. That’s why I will show how to collect events from different Windows machines and export them into one file for further investigation.

Task

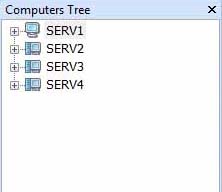

Let’s take the same task we solved previously. We have 4 Windows servers and we need to generate weekly reports of Error and Warning events in the Application and System event logs. We should use only standard Windows tools.

Instruments

Microsoft provides Windows PowerShell as a framework for automating administrative tasks and performing configuration management in Windows. My scripts require at least PowerShell version 3.0. If your PowerShell is outdated, you can update it by downloading Windows Management Framework from Microsoft’s site. To check the PowerShell version, type the following in the PowerShell console:

$PSVersionTable.PSVersion

In my case, the PowerShell version is 3, which is sufficient.
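The same check can be made part of a script so that it stops early on an older engine. This is only a minimal sketch, assuming the script should refuse to run below version 3; the variable names $requiredMajor and $currentMajor are illustrative and not part of the original script.

```powershell
# Minimum major version the export script is assumed to need
$requiredMajor = 3

# $PSVersionTable is an automatic variable; PSVersion is a System.Version
$currentMajor = $PSVersionTable.PSVersion.Major

if ($currentMajor -lt $requiredMajor) {
    throw "PowerShell $requiredMajor or later is required, found $currentMajor."
}
Write-Host "PowerShell $currentMajor detected, continuing."
```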

Research

To access event logs, Windows PowerShell comes with the Get-EventLog cmdlet:

Parameter Set: LogName
Get-EventLog [-LogName] <String> [[-InstanceId] <Int64[]> ] [-After <DateTime> ] [-AsBaseObject] [-Before <DateTime> ] [-ComputerName <String[]> ] [-EntryType <String[]> ] [-Index <Int32[]> ] [-Message <String> ] [-Newest <Int32> ] [-Source <String[]> ] [-UserName <String[]> ] [<CommonParameters>]

First we need to define the start date (the date after which we will get events). This date is calculated as today minus 7 days:

$now=get-date

$startdate=$now.adddays(-7)
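Note that Get-Date returns the current time as well as the date, so the seven-day window above starts at the same time of day at which the command is run. If a window aligned to midnight is preferred (an assumption; the task as stated does not require it), the Date property can be used. A small sketch comparing the two, with illustrative variable names:

```powershell
# Current date and time
$now = Get-Date

# Rolling window: seven days back from this exact moment
$rollingStart = $now.AddDays(-7)

# Calendar-aligned window: midnight seven days ago (.Date drops the time part)
$alignedStart = $now.Date.AddDays(-7)

Write-Host "Rolling start: $rollingStart"
Write-Host "Aligned start: $alignedStart"
```

With the aligned variant, a report produced on Monday morning covers the whole of the previous Monday as well.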

Now we can read warning and error events from a log for the last week:

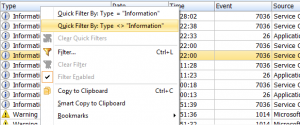

$el = get-eventlog -ComputerName Serv1 -log System -After $startdate -EntryType Error, Warning

Let’s check the result. Just type $el in the console. Yes, we can see events from the event log.
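A long event list is hard to judge by eye. One way to get a quick overview is to group the entries, for instance by type and source. The sketch below uses a few made-up objects in place of real log entries so that it can be tried anywhere; with real data, $el from the command above would take the place of $sample.

```powershell
# Made-up stand-ins for event log entries (illustration only, not real data)
$sample = @(
    [pscustomobject]@{ EntryType = 'Error';   Source = 'Disk' },
    [pscustomobject]@{ EntryType = 'Warning'; Source = 'Disk' },
    [pscustomobject]@{ EntryType = 'Error';   Source = 'Disk' }
)

# Count the entries per type and source, most frequent first
$summary = $sample |
    Group-Object EntryType, Source |
    Sort-Object Count -Descending |
    Select-Object Count, Name

$summary | Format-Table -AutoSize
```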

But how will we export the event log? Windows PowerShell has no built-in cmdlet for exporting to Excel, but it can export to a CSV file. Let’s try it now:

$el | export-csv eventlog.csv

Yes, it works, but multi-line descriptions ruined the output file.

Maybe export to XML will help?

$el | export-clixml eventlog.xml

But how can we display it clearly? Excel understands XML files, but I have no idea how to interpret the result.

I guess an XML transformation could convert this XML into a more readable file, but I’m not an XML guru. Fortunately, there is a more or less useful workaround: export only several event properties (without the event description) to CSV:

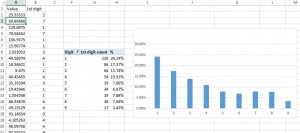

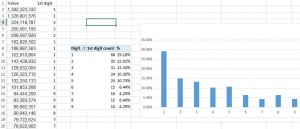

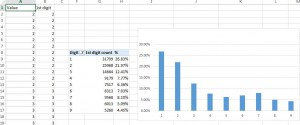

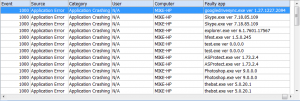

$el | Select-Object EntryType, TimeGenerated, Source, EventID | Export-Csv eventlog.csv -NoTypeInformation

Now we can read eventlog.csv in Excel without problems.
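If the description is still wanted in the report, one possible variation is to keep the Message property but collapse its line breaks with a calculated property before exporting. This is only a sketch: the sample object is invented to keep the example self-contained, and with real data $el would be piped instead of $sample.

```powershell
# Invented entry with a multi-line description (illustration only)
$sample = [pscustomobject]@{
    EntryType     = 'Error'
    TimeGenerated = (Get-Date)
    Source        = 'DemoSource'
    EventID       = 1000
    Message       = "First line`r`nSecond line"
}

# Calculated property: replace each run of CR/LF characters with one space
$flatMessage = @{
    Name       = 'Message'
    Expression = { $_.Message -replace '[\r\n]+', ' ' }
}

$rows = $sample | Select-Object EntryType, TimeGenerated, Source, EventID, $flatMessage

# ConvertTo-Csv shows what Export-Csv would write to the file
$rows | ConvertTo-Csv -NoTypeInformation
```

Each event then stays on a single CSV line, at the cost of losing the original line structure of the description.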

Putting it all together

It’s time to write the PowerShell script.

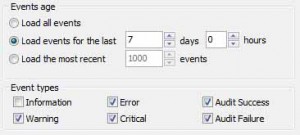

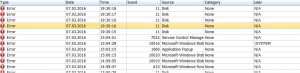

In brief: we will read recent (last 7 days) error and warning events from the Application and System event logs, join them, sort them by time and export them to CSV format.

#

# This script exports consolidated and filtered event logs to CSV

# Author: Michael Karsyan, FSPro Labs, eventlogxp.com (c) 2016

#

Set-Variable -Name EventAgeDays -Value 7 #we will take events for the latest 7 days

Set-Variable -Name CompArr -Value @("SERV1", "SERV2", "SERV3", "SERV4") # replace it with your server names

Set-Variable -Name LogNames -Value @("Application", "System") # Checking app and system logs

Set-Variable -Name EventTypes -Value @("Error", "Warning") # Loading only Errors and Warnings

Set-Variable -Name ExportFolder -Value "C:\TEST\"

$el_c = @() #consolidated error log

$now=get-date

$startdate=$now.adddays(-$EventAgeDays)

$ExportFile=$ExportFolder + "el" + $now.ToString("yyyy-MM-dd---HH-mm-ss") + ".csv" # HH = 24-hour clock, so AM and PM runs get different names; ":" is not allowed in file names

foreach($comp in $CompArr)

{

foreach($log in $LogNames)

{

Write-Host Processing $comp\$log

$el = @(get-eventlog -ComputerName $comp -log $log -After $startdate -EntryType $EventTypes) # @() gives an empty array when nothing matches, so no $null is appended below

$el_c += $el #consolidating

}

}

$el_sorted = $el_c | Sort-Object TimeGenerated #sort by time

Write-Host Exporting to $ExportFile

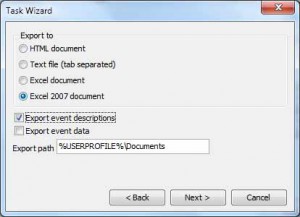

$el_sorted | Select-Object EntryType, TimeGenerated, Source, EventID, MachineName | Export-Csv $ExportFile -NoTypeInformation #EXPORT

Write-Host Done!
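The script above assumes that every server answers. A possible variation, sketched here under my own assumptions, wraps each read in try/catch so that one unreachable machine produces a warning instead of spoiling the whole report. The function name Get-ConsolidatedEvent and its -Reader parameter are hypothetical; -Reader exists only so the loop can be tried without real servers, and by default it calls Get-EventLog.

```powershell
function Get-ConsolidatedEvent {
    param(
        [string[]]$ComputerName,
        [string[]]$LogName,
        [datetime]$After,
        [string[]]$EntryType = @('Error', 'Warning'),
        # Hypothetical injection point; the default reads the real event log
        [scriptblock]$Reader = {
            param($c, $l, $a, $t)
            Get-EventLog -ComputerName $c -LogName $l -After $a -EntryType $t -ErrorAction Stop
        }
    )

    $all = @()
    foreach ($comp in $ComputerName) {
        foreach ($log in $LogName) {
            try {
                # @() keeps an empty result from adding a $null element
                $all += @(& $Reader $comp $log $After $EntryType)
            }
            catch {
                # Report the failure and carry on with the next log or server
                Write-Warning "Skipping ${comp}\${log}: $($_.Exception.Message)"
            }
        }
    }

    # Same ordering as in the original script
    $all | Sort-Object TimeGenerated
}

# Possible usage with the server names from the script above:
# Get-ConsolidatedEvent -ComputerName SERV1, SERV2 -LogName Application, System -After (Get-Date).AddDays(-7)
```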

Scheduling the task

To run the script (saved here as export-logs.ps1), use this command:

PowerShell.exe -ExecutionPolicy ByPass -File export-logs.ps1

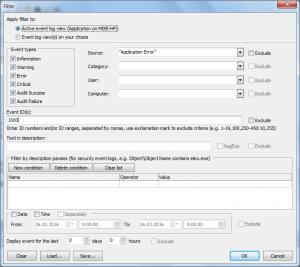

We can create this task in the Windows Task Scheduler GUI or from the PowerShell console.

Microsoft recommends scheduling a PowerShell script this way:

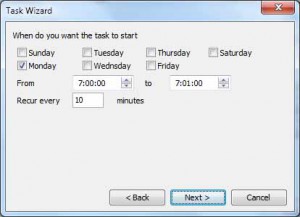

$Trigger=New-JobTrigger -Weekly -At "7:00AM" -DaysOfWeek "Monday"

Register-ScheduledJob -Name "Export Logs" -FilePath "C:\Test\export-logs.ps1" -Trigger $Trigger

But this may not work, because it adds the following action to Windows Task Scheduler:

powershell.exe -NoLogo -NonInteractive -WindowStyle Hidden -Command "Import-Module PSScheduledJob; $jobDef = [Microsoft.PowerShell.ScheduledJob.ScheduledJobDefinition]::LoadFromStore('Export Logs', 'C:\Users\Michael\AppData\Local\Microsoft\Windows\PowerShell\ScheduledJobs'); $jobDef.Run()"

If your policy prevents running PowerShell scripts, the export script won’t run, because the powershell.exe parameters lack the -ExecutionPolicy option.

That’s why I will use ScriptBlock instead of FilePath. This code does the trick:

$trigger=New-JobTrigger -Weekly -At "7:00AM" -DaysOfWeek "Monday"

$action="PowerShell.exe -ExecutionPolicy ByPass -File c:\test\export-logs.ps1"

$sb=[Scriptblock]::Create($action)

Register-ScheduledJob -Name "Export Logs" -ScriptBlock $sb -Trigger $trigger

Note that you need to start PowerShell elevated to run the Register-ScheduledJob cmdlet.

That’s all. Now you should have a task that runs every Monday at 7:00, collects events from your servers and exports them to CSV files.
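To confirm that the job was registered as intended, the PSScheduledJob cmdlets can be used to inspect it. A short sketch, assuming the job name "Export Logs" used above and the same user account that registered the job:

```powershell
# PSScheduledJob ships with Windows PowerShell 3.0 and later
Import-Module PSScheduledJob

# Show the registered job definition and its trigger
Get-ScheduledJob -Name "Export Logs"
Get-ScheduledJob -Name "Export Logs" | Get-JobTrigger

# After the job has run at least once, read the output of its instances
Get-Job -Name "Export Logs" | Receive-Job -Keep
```

The -Keep switch leaves the results in place so they can be read again later.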

Conclusion

As you can see, the problem of exporting events to Excel can be solved without third-party tools. This method is somewhat limited, but it works.